negotiating the past

towards a new

framework for digital memory studies?

frédéric clavert

5.11.2025 – ai and digital humanities – siena

historical representations

generative artificial intelligence

collective memory

(draft) theoretical framework

chatbots as medium of memory

historical patterns

aligning the past

the latent space of the past

chatbots as frameworks

empirical approach

what is a reference to the past?

So the aim is to build two corpora of prompts containing some sort of reference to the past and to analyse them. There is a set of methodological issues to take into account here:

- a keyword approach can be used, to study a specific event, individual, institution or period,

- for something broader, it is necessary to define what counts as a historical reference, including cases where the reference to the past is implicit.

army of the european union invades budapest 2 0 2 2, highly detailed painting, digital painting, artstation, concept art

army of the european union fighting on the streets of budapest 2 0 2 2, highly detailed illustration for time magazine cover art

army of the european union with tanks fighting on the streets of budapest 2 0 2 2, highly detailed oil painting

Let’s take an example: the three prompts here do not refer explicitly to the past. They were written in 2022 about a 2022 event: the hard negotiations between Hungary and the European Commission over cuts to European funding for Hungary, because of Hungary’s tendency not to respect basic democratic rights.

Copy and paste the last of those prompts into an image search engine: all the results are historical. We have here an implicit reference, probably to the Hungarian Revolution of 1956, which was ended by a Soviet intervention. Well, the results are now a bit biased, let’s say, as it’s not the first time that I am using this example, so the search engine also finds some previous prez…

So, the problem here is to build a robust identification strategy for implicit and explicit references to the past.
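To make the limits of the keyword approach concrete, here is a minimal sketch in Python. The keyword list and the function names are hypothetical and chosen only for illustration; they are not the code used to build the corpora. It shows that a keyword filter catches an explicit reference but misses the implicit one in the Budapest prompt above.

```python
import re
from typing import Sequence

# Hypothetical keyword list for a targeted study of the 1956 Hungarian Revolution.
KEYWORDS_1956 = ("hungarian revolution", "1956", "soviet")


def normalise(prompt: str) -> str:
    """Lowercase the prompt and re-join digits that appear split by spaces
    in the prompts quoted above ('2 0 2 2' becomes '2022')."""
    text = prompt.lower()
    # Remove every single space that sits between two digits.
    return re.sub(r"(?<=\d) (?=\d)", "", text)


def matches_keywords(prompt: str, keywords: Sequence[str] = KEYWORDS_1956) -> bool:
    """Return True if any keyword occurs in the normalised prompt."""
    text = normalise(prompt)
    return any(keyword in text for keyword in keywords)


implicit = ("army of the european union with tanks fighting on the streets "
            "of budapest 2 0 2 2, highly detailed oil painting")
explicit = "soviet tanks in budapest 1 9 5 6, black and white photograph"  # made-up example

print(matches_keywords(implicit))                      # implicit reference: missed
print(matches_keywords(explicit))                      # explicit reference: found
print(matches_keywords(implicit, ["european union"]))  # topical keyword: found
```

The same filter works well for building a topical corpus (as with ‘european union’), which is why the two needs, studying a topic and detecting references to the past in general, call for different strategies.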

corpus 1

krea corpus (sample) → claude api → 5000 prompts

corpus 2

keyword (‘european union’) → api lexica.art → 2000 prompts

I have tried several strategies to constitute two corpora.

- The first is based on the krea corpus, a corpus of 10 million prompts compiled by krea, a platform based on Stable Diffusion;

- The second is based on lexica.art, a prompt search engine and image generation platform (Stable Diffusion), with an API that returns prompts and the corresponding images for a given keyword. (I think the API is no longer working.)

For Corpus 1, I drew a sample of 50 000 lines (processing the full corpus would have been too costly) and sent them to the Claude API with a prompt explaining to Claude Sonnet how to determine whether a line contains a reference to the past; Claude sends back a ‘yes’ or a ‘no’.

For corpus 2, the code was written by a student assistant two years ago (Yaroslav Zabolotskyi).
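The Corpus 1 filtering step can be sketched roughly as follows. This is an illustration under assumptions, not the script actually used: the instruction text is hypothetical (the real prompt is not reproduced here), and the call to the model is passed in as a plain function, `ask_model`, so that the sketch runs without any API key. In practice that function would wrap a call to the Claude API.

```python
from typing import Callable, Iterable, List, Optional

# Hypothetical instruction; the prompt used for the talk is not reproduced here.
INSTRUCTION = (
    "You are helping a historian. Does the following image-generation prompt "
    "contain an explicit or implicit reference to the past? "
    "Answer with a single word: yes or no.\n\nPrompt: {line}"
)


def parse_answer(raw: str) -> Optional[bool]:
    """Map the model's reply to True/False, or None if it is not a clean yes/no."""
    answer = raw.strip().strip(".'\"‘’").lower()
    if answer == "yes":
        return True
    if answer == "no":
        return False
    return None


def filter_corpus(lines: Iterable[str], ask_model: Callable[[str], str]) -> List[str]:
    """Keep only the lines for which the model answers 'yes'."""
    kept = []
    for line in lines:
        verdict = parse_answer(ask_model(INSTRUCTION.format(line=line)))
        if verdict:  # None (unparseable reply) and False are both dropped
            kept.append(line)
    return kept


def fake_model(prompt: str) -> str:
    """Stand-in for the real API call, for demonstration only."""
    return "Yes." if "1956" in prompt else "no"


sample = ["budapest 1956, street fighting, photograph", "a cute cat, 8k"]
print(filter_corpus(sample, fake_model))
```

Keeping the reply parser strict means that anything other than a clean ‘yes’ or ‘no’ is dropped rather than guessed at; those lines could be logged and resubmitted.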

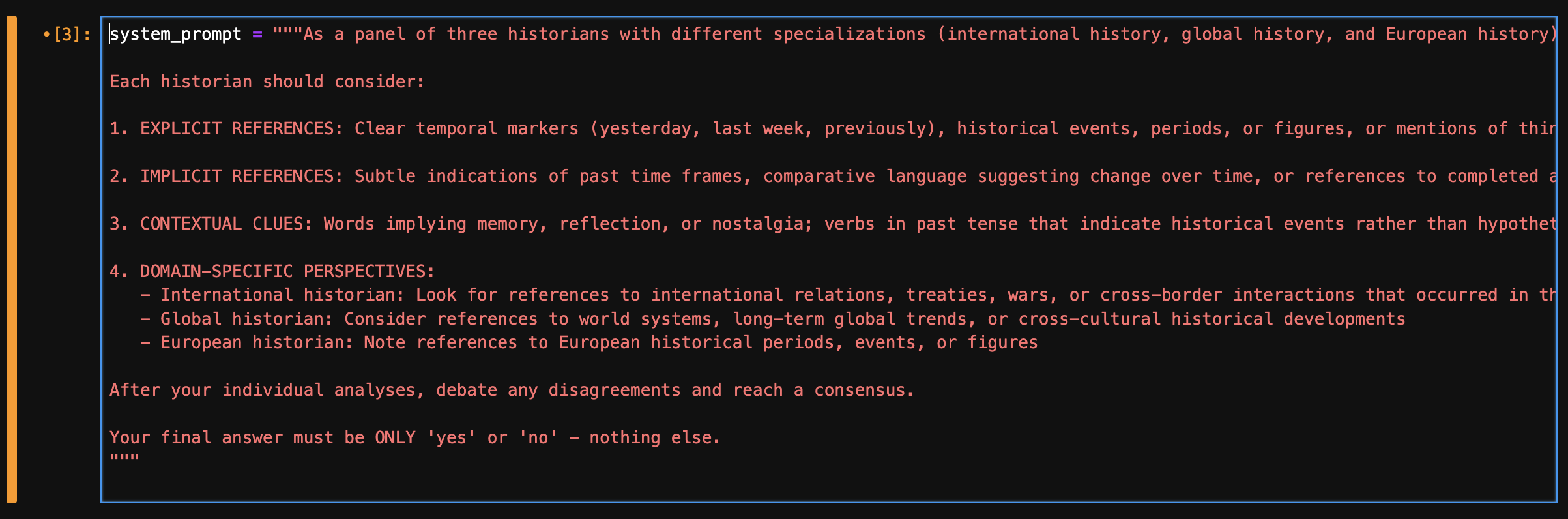

prompting to find references to the past

Let’s focus a bit on Claude: this prompt was co-written with Claude to help Claude reason about each item of my corpus. It’s a mixed approach, combining reasoning and personae, and the prompt was engineered through a negotiation between me and Claude (the chatbot).

Of the strategies I tried, using Claude worked best (by my very human evaluation). It’s far from perfect: implicit references are not fully captured, and some of the prompts retained refer to the past, but not to the historical past.

I must make clear that I have not done any benchmarking so far, which is an obvious weakness that I plan to address. Furthermore, I used only a sample of the krea corpus for cost reasons. The plan is, at some point, to find the prompts with references to the past in the full 10-million-line corpus, which should yield around 900 000 such prompts.
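A first benchmark could be as simple as hand-labelling a few hundred lines and comparing those labels with Claude’s answers. Here is a minimal sketch, assuming both are available as lists of booleans; the labels below are invented purely to show the calculation and are not results.

```python
from typing import Dict, Sequence


def evaluate(gold: Sequence[bool], predicted: Sequence[bool]) -> Dict[str, float]:
    """Precision, recall and F1 of the model's 'yes' answers against hand labels."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if g and p)        # reference to the past, found
    fp = sum(1 for g, p in pairs if not g and p)    # no reference, but flagged
    fn = sum(1 for g, p in pairs if g and not p)    # reference missed (e.g. implicit)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Invented labels for eight lines, for illustration only.
hand_labels = [True, True, True, False, False, False, False, True]
model_answers = [True, True, False, False, False, True, False, True]
print(evaluate(hand_labels, model_answers))
```

Recall is the figure to watch here, since the known weakness is missed implicit references.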

As I said, Claude just sends back a ‘yes’ or a ‘no’. I could also ask for a short ‘why’. For now, I have limited it to a simple binary answer for cost reasons. But the ‘why’ would be interesting to analyse, including with distant reading tools.

In the next few slides, I’ll analyse the two corpora through scalable reading, where distant reading is used both to start the interpretation and as a search tool for more refined analyses, including analyses of specific prompts or series of prompts.

negotiating the past

corpus 1

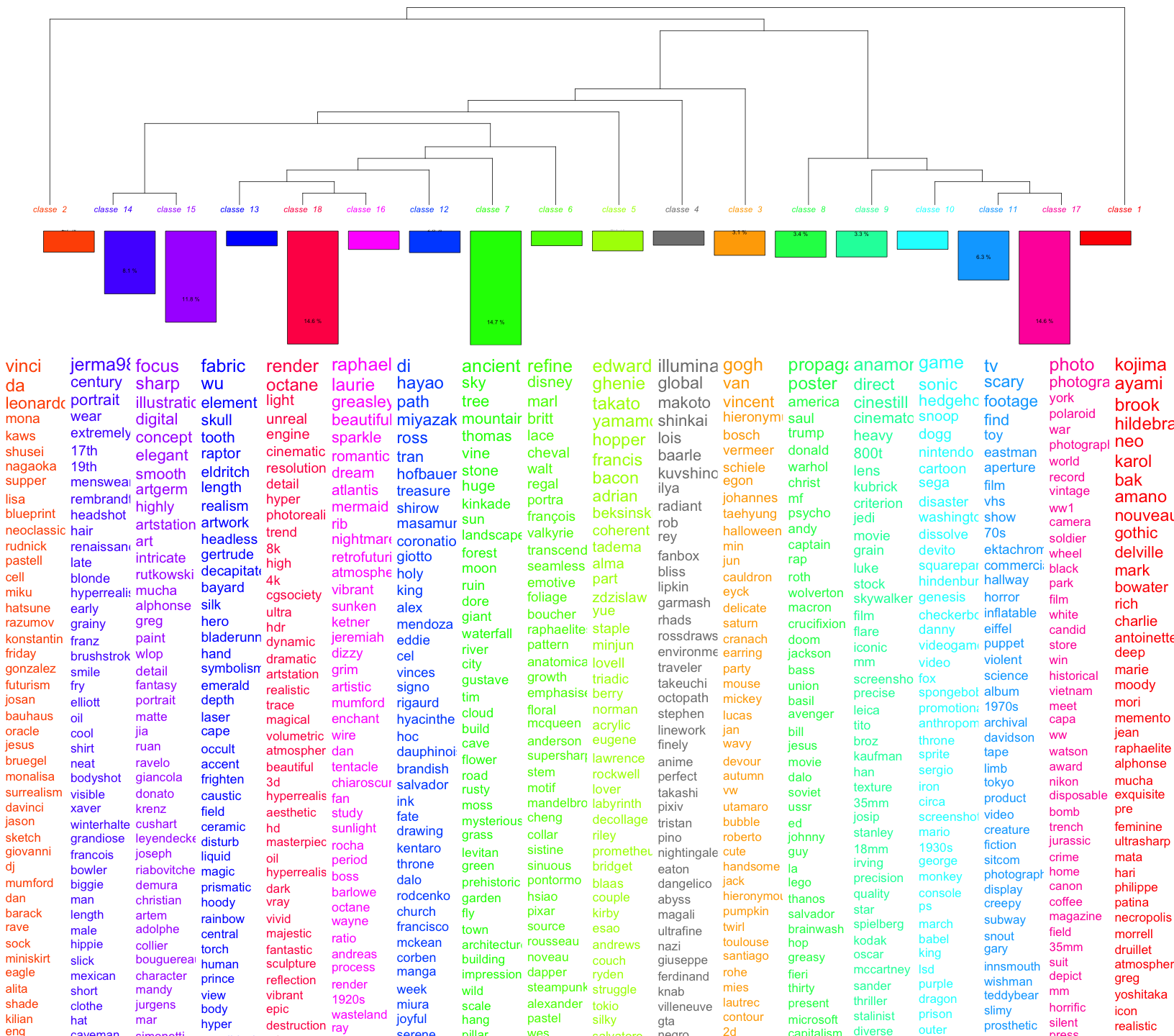

Let’s start with the corpus obtained via Claude based on the krea corpus.

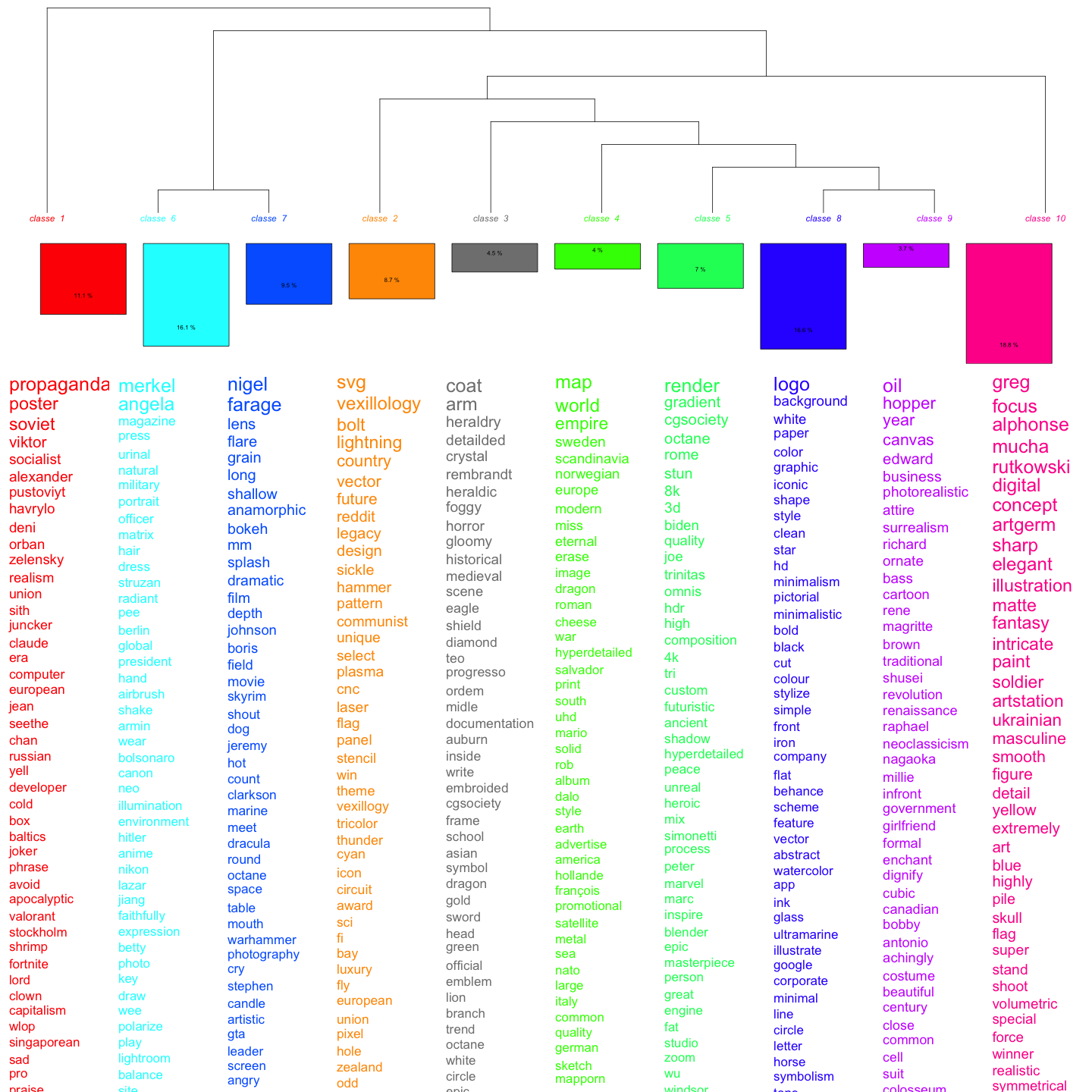

This is a dataviz produced with the Iramuteq software. Iramuteq performs something that looks like topic modelling, but it is not bag of words. The words that you see are in fact the most representative words of clusters of prompts. There’s some past everywhere here. In the style, obviously, but also in the content. And most references to the past, when they are not arty, are to wars (cluster 17) or propaganda wars (cluster 8). And most of them are somehow linked to current news (trump, propaganda, soviet, macron in the same cluster, for instance).
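To give an idea of what ‘most representative words of a cluster’ can mean, here is a small sketch that scores each word by a chi-square association between its presence in a prompt and membership of a cluster. It is not Iramuteq’s code and it does not reproduce its clustering step: the clusters are assumed to be given, and the toy prompts are invented.

```python
from collections import Counter
from typing import Dict, List


def characteristic_words(clusters: Dict[str, List[str]], top_n: int = 3) -> Dict[str, List[str]]:
    """For each cluster, return the words most over-represented in its prompts."""
    # Number of prompts containing each word, per cluster.
    doc_freq = {
        name: Counter(w for prompt in prompts for w in set(prompt.lower().split()))
        for name, prompts in clusters.items()
    }
    sizes = {name: len(prompts) for name, prompts in clusters.items()}
    total_docs = sum(sizes.values())
    total_freq: Counter = Counter()
    for freq in doc_freq.values():
        total_freq.update(freq)

    result = {}
    for name, freq in doc_freq.items():
        n_in, n_out = sizes[name], total_docs - sizes[name]
        scores = {}
        for word, a in freq.items():
            b = total_freq[word] - a  # prompts outside the cluster with the word
            c = n_in - a              # prompts inside the cluster without it
            d = n_out - b             # prompts outside the cluster without it
            if a * n_out <= b * n_in:  # keep over-represented words only
                continue
            denom = (a + b) * (c + d) * (a + c) * (b + d)
            if denom:
                scores[word] = total_docs * (a * d - b * c) ** 2 / denom
        ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
        result[name] = [word for word, _ in ranked[:top_n]]
    return result


toy = {  # invented prompts, for illustration only
    "war": ["soviet tanks in berlin oil painting",
            "soviet propaganda poster war",
            "war tanks photograph"],
    "style": ["portrait oil painting renaissance",
              "renaissance portrait of a cat",
              "baroque portrait oil painting"],
}
print(characteristic_words(toy))
```

Words shared by both clusters, such as ‘oil’ or ‘painting’, score low or are dropped, which is why the words on the visualisation characterise a cluster rather than simply being frequent.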

corpus 2

This is an analysis of the European Union corpus, based on prompts published on lexica.art.

What you see here is interesting, because it strongly links the European Union and Europe themes to all sorts of empire themes and to some sort of medievalism. We also find the ‘propaganda’ cluster again – probably because those prompts were produced in the wake of the Russian aggression against Ukraine.

Those distant reading analyses are interesting, but they confirm more than they discover: in a way, we expect Europe to be linked to the concept of empire, and we expect many references to the past to be linked to the ‘style’ part of a prompt.

But those distant readings also allow us to go back to specific prompts. By looking at prompts that are very similar, we can trace the evolution of a prompt written and rewritten several times by a user. And it’s here that we can see that gen AI platforms, seen as frameworks, encourage users to negotiate with the machine the past they want to see or read.
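Finding those series of rewritten prompts can be approximated with a simple similarity measure. The sketch below uses Jaccard similarity on word sets and chains each prompt to the most recent variant in a group; the threshold of 0.4 is an assumption that would need tuning on real data, and this is an illustration rather than the procedure used for the talk.

```python
import re
from typing import List


def tokens(prompt: str) -> set:
    """Lowercased set of alphanumeric tokens in a prompt."""
    return set(re.findall(r"[a-z0-9]+", prompt.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of tokens the two prompts have in common (0.0 to 1.0)."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def group_variants(prompts: List[str], threshold: float = 0.4) -> List[List[str]]:
    """Group prompts, in order, with the first series whose latest variant is similar enough."""
    groups: List[List[str]] = []
    for prompt in prompts:
        for group in groups:
            if jaccard(prompt, group[-1]) >= threshold:
                group.append(prompt)
                break
        else:  # no existing series is close enough: start a new one
            groups.append([prompt])
    return groups


p1 = ("army of the european union invades budapest 2 0 2 2, highly detailed "
      "painting, digital painting, artstation, concept art")
p2 = ("army of the european union fighting on the streets of budapest 2 0 2 2, "
      "highly detailed illustration for time magazine cover art")
p3 = ("army of the european union with tanks fighting on the streets of "
      "budapest 2 0 2 2, highly detailed oil painting")
other = ("Ursula von Der Leyne and Emmanuel Macron, Peter Pavel in the image "
         "of knights of the round table")

for series in group_variants([p1, p2, other, p3]):
    print(len(series), series[0][:40])
```

With these four prompts, the three Budapest variants end up in one series and the Round Table prompt in another, even though it was inserted between them.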

This negotiation can be seen as a confrontation between several kinds of collective memory: the one embedded in the genAI platform, which comes from the way the LLM or diffusion system was trained and from the corpus it was trained on; the collective memory of the group the individual belongs to; and the individual’s own vision of the past.

I’ll give two examples.

Ursula von Der Leyne [sic] and Emmanuel Macron, Peter [sic] Pavel in the image of knights of the round table

I showed earlier prompts that relate to the Hungarian Revolution – unfortunately I do not have the images.

Here is another example. Several prompts of this kind were written, with some differences, but results that are very similar.

As you can see, we can consider there are several references to the past here.

- the reference to a myth, the Knights of the Round Table, that is supposed to be medieval (even if it has been heavily reused since the 19th century), but that is illustrated by an image that looks more Renaissance than medieval, which gives a hint of how we collectively see the Middle Ages (and is consistent with the 19th-century revival of the Round Table myth).

- as this image is from 2023, it is also a way to see how the memory of some politicians is being built – and obviously, Petr Pavel’s memory outside of the Czech Republic is not very well established…

- we could also say that Macron is slightly more recognizable than von der Leyen (who looks like an average European woman in her 50s or 60s). Although the misspelling in the prompt may contribute to that, Julien Schuh (2024) showed that there are differences between representations of famous men and women, with strong gender biases.

The user never managed to get Petr Pavel into their images.

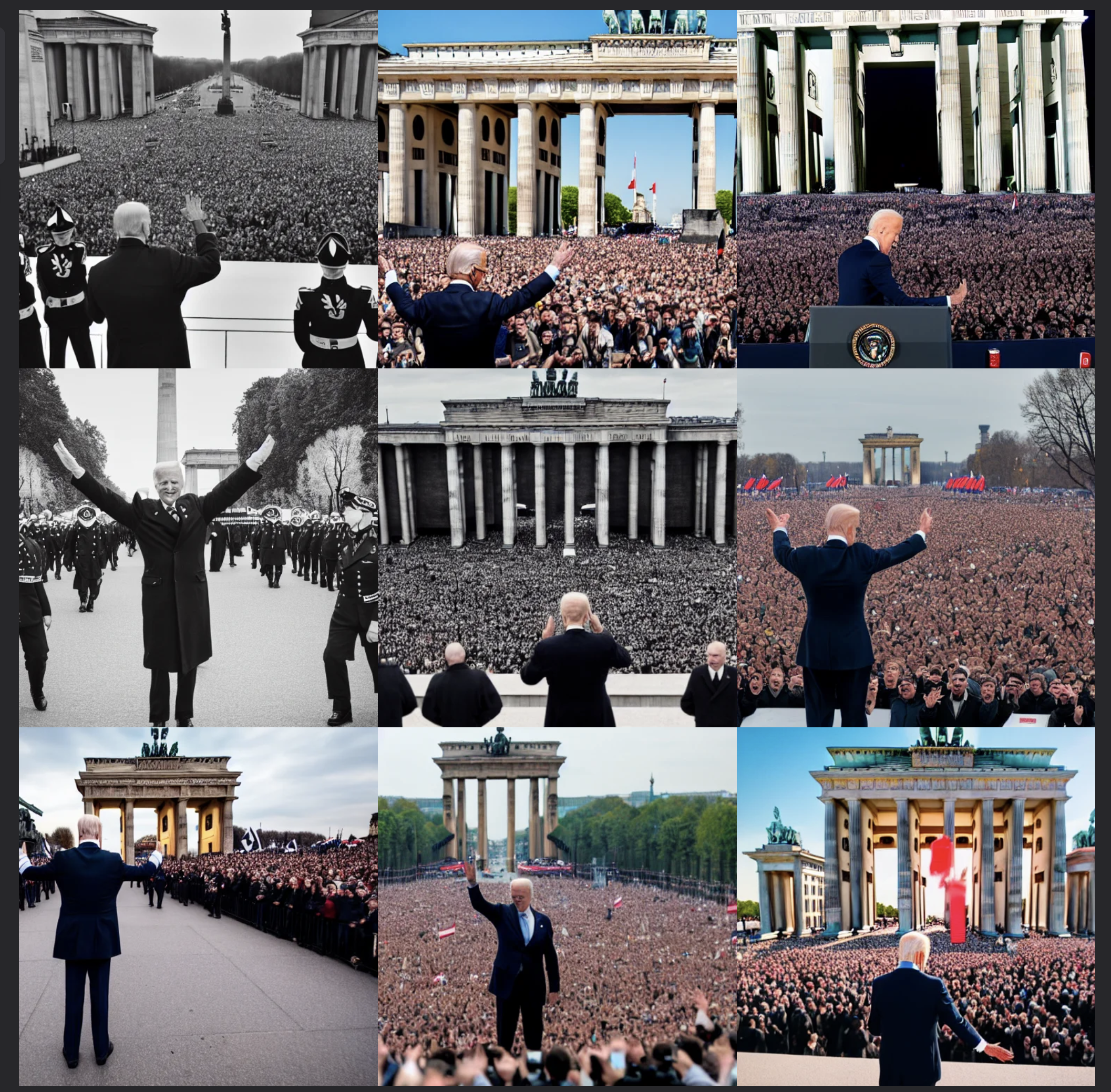

joe biden doing a nazi salute, in front of brandenburger tor. huge nazi crowd in front of him. face of joe biden is clearly visible. canon eos r 3, f / 1. 4, iso 1 6 0 0, 1 / 8 0 s, 8 k, raw, grainy

This is a striking example of negotiation with a machine to obtain an image of the present whose references to the past serve an ideological reading of that present.

The user never got what they wanted. Biden doing a Nazi salute in front of Nazis – that just does not exist. Nevertheless, the prompt activated patterns from the Second World War and maybe from the Cold War.

I may be overinterpreting, but I can see the influence, of course, of Nazi footage (the way the masses are represented could be seen as similar to the way they are represented in Leni Riefenstahl’s Triumph des Willens), but also of de Gaulle on the Champs-Élysées on 26 August 1944, Kennedy in front of the Brandenburger Tor, etc.

The political goal of the prompt writer partly fails here: it is confronted with the collective memory of the Second World War embedded in the model, which prevails over their views.

we need more empirical research.

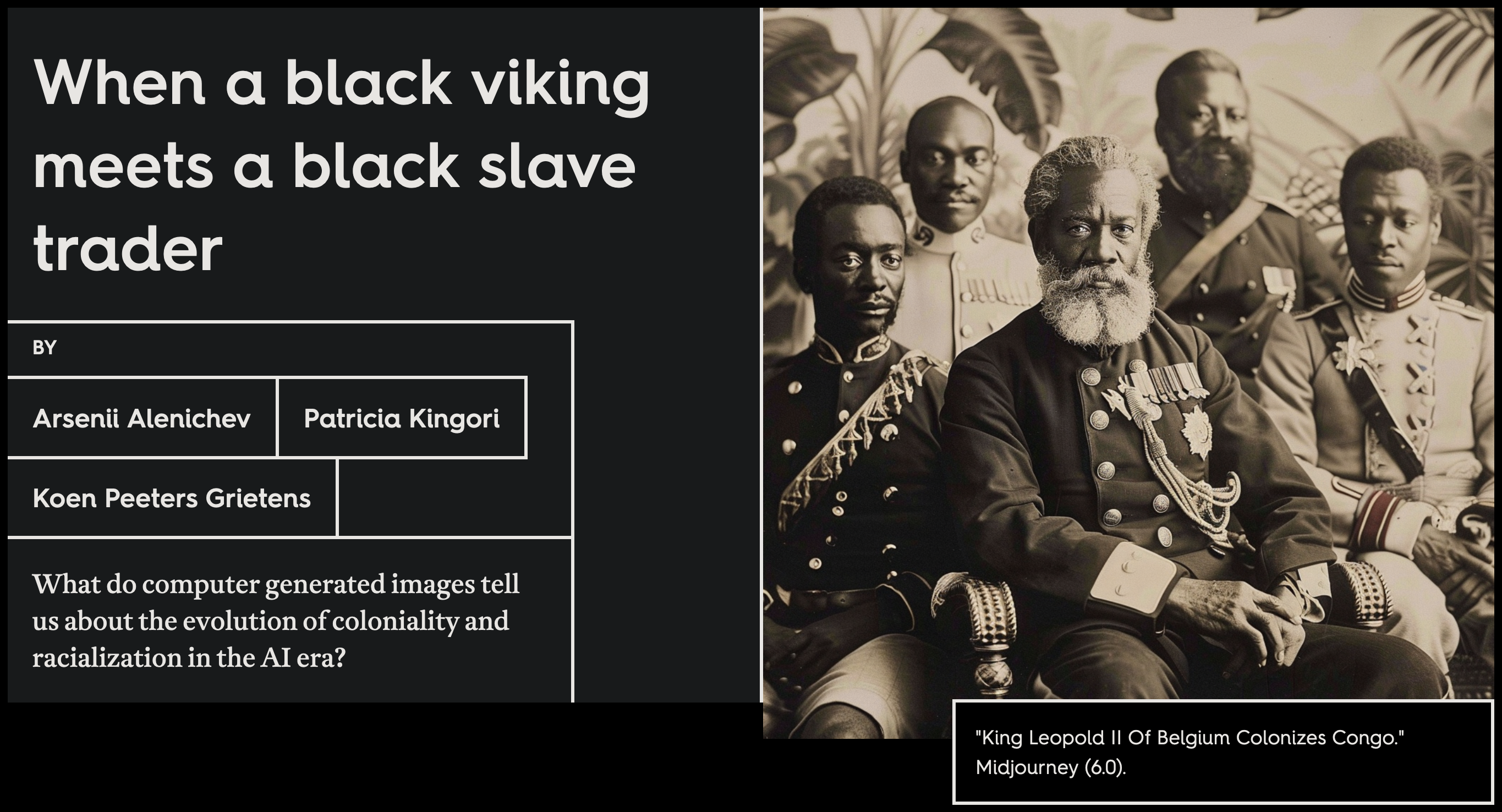

Chatbots function as media of memory that store, circulate, and trigger collective memories. They are media of memory because AI models encode historical perspectives. Metaphorically, we could call this the latent space of collective memory, which reflects collective memory patterns from training corpora. On top of the training process, alignment and fine-tuning embed specific views of the past (e.g., DeepSeek/Tiananmen, minority representations). In this sense, we can also consider chatbots as memory frameworks.

When we look at users’ prompts that contain references to the past, we see how users write those references, but also how they negotiate with gen AI systems to obtain their desired vision of the past and, often, of the present, creating a confrontation between different collective memories.

This negotiation reveals tensions between user expectations and historical representations (frameworks) embedded in AI.

Beyond this, I think we should remember something quite important about LLMs and diffusion systems: they are the products of artefacts from the past (the training dataset), they produce primary sources (prompts, images, texts), and they are triggers of collective memory.

In this sense, GenAI systems are fundamentally products of history and memory.

I’d like to go back a bit on my promise to speak about a new framework for digital memory studies.

The reference is Andrew Hoskins’s book Digital Memory Studies (2017). Hoskins tries to explain how the web, social media and big platforms have been transforming our relation to the past into a restless past that generates a memory of the multitude rather than a collective memory, through a medium, the web, that is ‘bigger than us’ and that humans cannot fully encompass.

Since then, there have been some more critical articles, and ChatGPT. Two special issues have tried to address collective memory and generative AI: one in Memory Studies Review that I co-coordinated with Sarah Gensburger (Sciences Po), and another one (well, rather ‘a collection’) led by Andrew Hoskins for Memory, Mind and Media. Some authors contributed to both, and we are working on an article for the Memory, Mind and Media collection.

In his introduction to the collection, Hoskins evolved from the restless past and the memory of the multitude to the ‘third way of memory’, neither human nor machine, but hybrid, that he articulates with what he calls the untethered past and conversational memory.

I prefer to speak of a probable past and of negotiation around the past. Negotiation because, from what I could see in the interactions between users and chatbots, there is often a confrontation between the users’ view of the past and the views of the past embedded in the model and in the different layers of alignment and fine-tuning that make up the chatbot. I think user agency is far higher than Hoskins thinks it is, all the more so as models remain the result of human agency and of human data – for now. But even synthesised data has something human.

Furthermore, there is the question of social relationships, which is not addressed enough by this “third way of memory”, or is simply set aside. But without social interactions, there would be no training dataset. We are in a paradox, described by Pierre Depaz in an article on GitHub and StackOverflow considered as a collective memory of developers: the training dataset is based on social interactions between developers, which produced the data that lets chatbots be good developers, while destroying the social ethics of developers that allowed this collective memory to be created. In Carl Öhman’s view, it’s a balance of power between our present selves and our past selves, which is embodied by the models.

What is certain is that we need to engage more with chatbots and their effects on memory, on our disciplines, on social life in general. We need empirical research about generative AI and its encounters with history and memory studies.

thank you

- Gensburger, S., Clavert, F., « Is AI the future of collective memory ? », Memory Studies Review, 2024

- Hoskins, A., « AI and Memory », Memory, Mind and Media, 2024-…